Artificial intelligence has become a major driver of innovation in almost every industry – supporting the development of medicine, optimising transportation and logistics, automating business processes and enhancing the security of banking and insurance services. However, its rapid development comes with numerous dangers, which call for appropriate actions and regulations. Find out what the AI Act is, what rules it introduces and how it can affect the development of digitally oriented companies.

Key takeaways from this article

- New regulations introduced in the EU require companies to bring their AI systems up to certain standards and stipulate heavy financial penalties for non-compliance.

- Implementing AI in accordance with the AI Act requires companies to take a strategic approach and work closely with legal, technology and operations teams.

- Proper implementation of the regulations will allow companies not only to comply with the law, but also to build customer trust and a strong competitive advantage.

AI threats and challenges – why does artificial intelligence need regulations?

As artificial intelligence develops, the number of potential threats that come with it continues to grow: breaches of privacy, manipulation of information, and algorithms that discriminate against certain social groups. AI is advancing so quickly that legislation struggles to keep up. The lack of clear rules and ethical standards leaves room for AI to be used in harmful ways, hence the need to keep adapting the regulations. This is why the European Union created the AI Act, the first comprehensive legislation regulating AI at the EU level.

AI Act – a guarantee of transparency, security and innovation

The AI Act aims to ensure user safety and protect human rights by introducing clear regulations for the development and implementation of AI. The main goals of the AI Act are:

- Ensuring transparency – users of AI need to understand how systems work and how they can be controlled.

- Protecting fundamental rights – prohibiting the use of AI in ways that violate human rights, such as in manipulation schemes or illegal facial recognition.

- Promoting innovation – creating a framework to enable the development of responsible and secure technologies based on artificial intelligence.

When do the first new regulations come into effect?

The first provisions started to apply in early February 2025. The European Commission has also published guidelines on prohibited artificial intelligence practices as defined by Regulation (EU) 2024/1689 (the AI Act). The full text of the act is available in 24 languages, and tools such as AI Act Explorer, Summary of the AI Act and EU AI Act Compliance Checker help with understanding the regulations.

The regulations will come into effect gradually from 2025 to 2027, and failure to comply can result in severe financial penalties: for the most serious violations, up to 35 million euros or 7% of a company's global annual turnover, whichever is higher. That is why it is so important to familiarise yourself with the new regulations, analyse them carefully and prepare for their implementation.
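
To make the penalty ceiling concrete, here is a minimal sketch of the arithmetic. It assumes the cap is the higher of the fixed amount and the turnover-based amount, which is how the Act frames fines for prohibited practices. The function name and constants are hypothetical, and the sketch ignores special cases such as the lower caps for other types of violation.

```python
# Illustrative only: upper bound of a fine for the most serious violations.
# Names are hypothetical; amounts are whole euros.
FIXED_CAP_EUR = 35_000_000
TURNOVER_SHARE_PERCENT = 7


def max_penalty_eur(global_annual_turnover_eur: int) -> int:
    """Return the higher of the fixed cap and 7% of global annual turnover."""
    if global_annual_turnover_eur < 0:
        raise ValueError("turnover must not be negative")
    turnover_based = global_annual_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


print(max_penalty_eur(100_000_000))    # 7% is 7 million, so the fixed cap applies
print(max_penalty_eur(1_000_000_000))  # 7% is 70 million, which exceeds the fixed cap
```

Under these assumptions the turnover-based amount becomes the relevant ceiling once global annual turnover exceeds 500 million euros.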

Since February 2, 2025, Chapters I and II (Articles 1-5) have been in effect, introducing key requirements for AI. In Chapter I, Article 3 sets out definitions, including that of an AI system, while Article 4 requires organisations to ensure a sufficient level of AI literacy among the people who work with AI systems. In Chapter II, Article 5 lists the practices that pose an unacceptable risk of violating fundamental rights: in short, the prohibited practices.

In addition, each EU member state is responsible for implementing the Artificial Intelligence Act into its national legal framework. To ensure compliance and enforcement, countries must establish a supervisory authority responsible for monitoring artificial intelligence activities, assessing compliance and imposing sanctions when necessary. National authorities will work with the European Commission and other regulators to maintain a consistent approach across the EU.

Current definition of AI – the key to understanding the regulations

To comply with the AI Act effectively, it is crucial to understand what the act means by artificial intelligence. In Chapter I, Article 3, the AI Act defines an AI system as a machine-based system designed to operate with varying levels of autonomy that infers, from the input it receives, how to generate outputs that can influence physical or virtual environments. The definition is deliberately broad: what matters is the ability to infer outputs from inputs, not the specific technique used.

AI system means a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments;

AI Act, Ch. I, Art. 3, Pt. 1

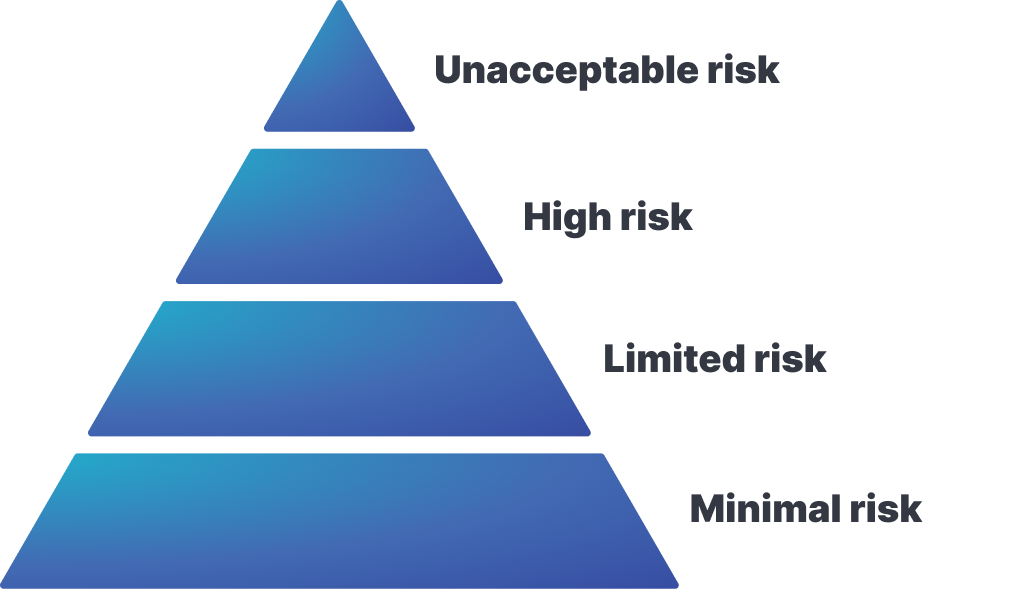

AI risk levels – what is allowed and what is not?

The AI Act defines different levels of AI risk.

- Unacceptable risk (e.g., manipulation, excessive surveillance systems) – such practices are completely prohibited (Chapter II, Article 5).

- High risk (e.g., AI in recruitment, credit scoring) – such systems must meet documentation, control and security obligations (Chapter III, Art. 6, 8-17).

- Reduced (limited) risk (e.g., chatbots, content generators) – such systems must meet transparency requirements: users should be informed that they are talking to a bot or that content is AI-generated. Chapter IV describes the transparency responsibilities of providers and deployers of AI systems.

- Low or minimal risk – such systems are not subject to special restrictions.
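
For teams building an internal inventory of AI systems, the tiers above could be sketched as a simple lookup. This is an illustrative sketch only, not a legal classification tool: the enum, table and function names are hypothetical, and the use cases are just the examples from the list above.

```python
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    REDUCED = "reduced"  # often also called "limited risk"
    MINIMAL = "minimal"


# Obligations per tier, paraphrased from the list above.
OBLIGATIONS = {
    RiskLevel.UNACCEPTABLE: "prohibited (Chapter II, Article 5)",
    RiskLevel.HIGH: "documentation, control and security obligations (Chapter III)",
    RiskLevel.REDUCED: "transparency obligations (Chapter IV)",
    RiskLevel.MINIMAL: "no special restrictions",
}

# Hypothetical inventory built only from the article's examples.
EXAMPLE_USE_CASES = {
    "manipulation": RiskLevel.UNACCEPTABLE,
    "recruitment": RiskLevel.HIGH,
    "credit scoring": RiskLevel.HIGH,
    "chatbot": RiskLevel.REDUCED,
    "content generator": RiskLevel.REDUCED,
}


def obligations_for(use_case: str) -> str:
    """Describe the tier and obligations for a known example use case.

    Raises KeyError for anything outside the example table: an unknown
    system needs a proper legal assessment, not a default tier.
    """
    level = EXAMPLE_USE_CASES[use_case.lower()]
    return f"{level.value} risk: {OBLIGATIONS[level]}"


print(obligations_for("recruitment"))
print(obligations_for("chatbot"))
```

Deliberately failing on unknown use cases, rather than defaulting to the minimal tier, keeps unclassified systems visible until someone has actually assessed them.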

Conclusion

Organisations prioritising successful digital transformation should keep abreast of evolving AI regulations – learning and adapting their AI systems to operate in a manner that complies with current regulations. The safe path in implementing ethical AI solutions is to choose an experienced and reliable technology partner that can provide your company with best practices in transparency, security and compliance, and implement optimal solutions that drive growth and profit for the company.